Aerbits: A New Era of Intelligence

The Evolution Continues

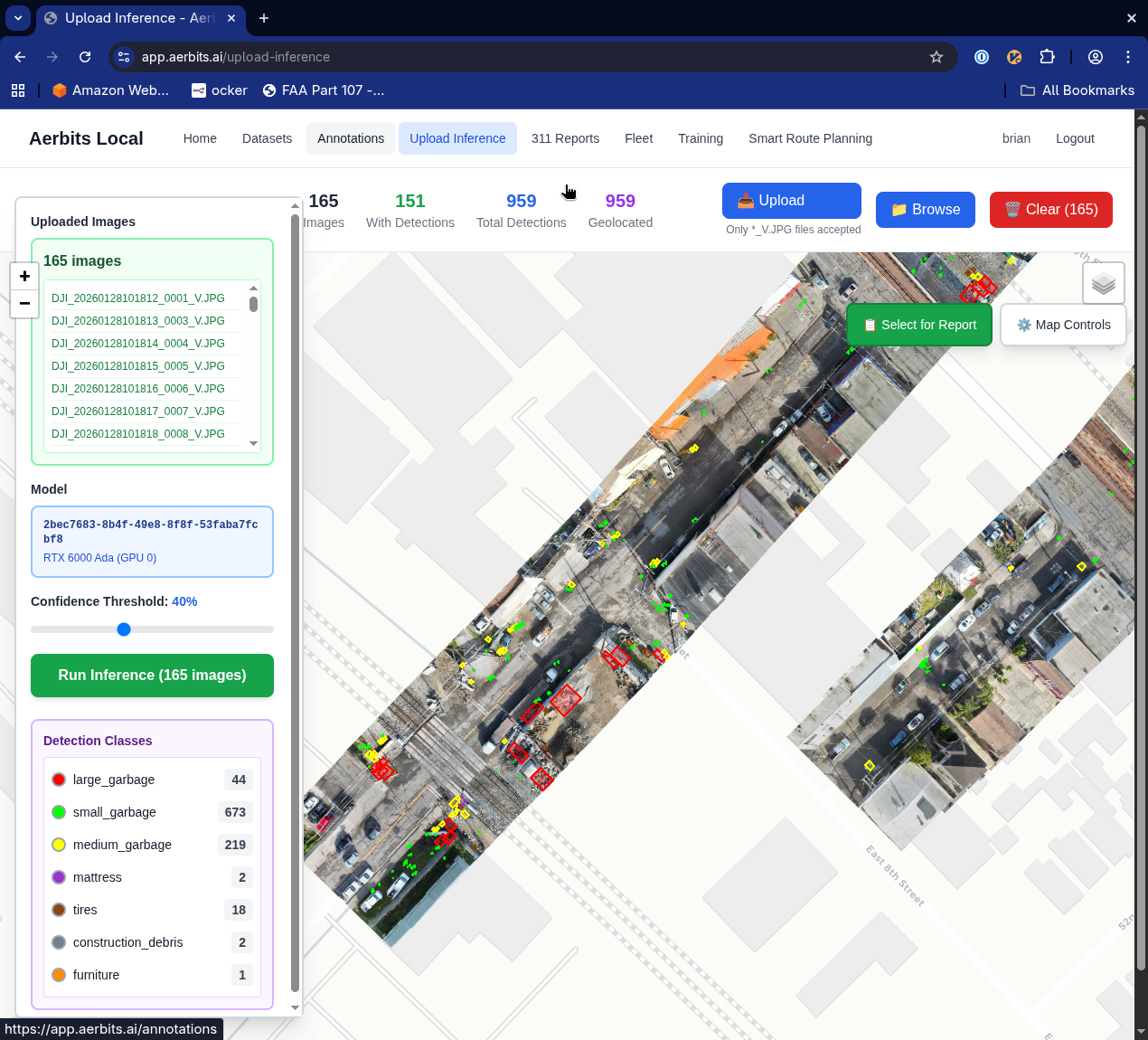

Three years ago, I started Aerbits with a simple mission: use drones and computer vision to detect illegal dumping in my San Francisco neighborhood. What began as a personal frustration with uncollected waste has evolved into a sophisticated platform that's pushing the boundaries of what's possible with aerial intelligence and modern AI.

Today, I'm rebuilding Aerbits from the ground up—not because the old system was broken, but because the field of computer vision has transformed so dramatically that we can now deliver capabilities I could only dream about in 2021. The combination of transformer-based detection models, segmentation networks, and vision-language models has created an inflection point for what field crews can accomplish with real-time aerial intelligence.

Rebuilding the Foundation

The first major undertaking was a complete backend rebuild. The original Aerbits architecture, while functional, was built during the early days of the project when I was still learning the problem space. Three years of operational experience revealed exactly where the bottlenecks were, which workflows needed to be streamlined, and what capabilities crews actually needed in the field.

The new backend is designed around a few core principles:

- Multi-dataset support - different projects, different clients, different detection classes

- Real-time processing pipelines for time-sensitive field operations

- Flexible model architecture - swap between YOLO, RT-DETR, and segmentation models based on task requirements

- Enhanced annotation and labeling tools for continuous model improvement

- Advanced segmentation capabilities for precise volume estimation and area calculations

This foundation enables everything else that follows.

The Model Renaissance

The pace of innovation in object detection and segmentation has been staggering. When I started Aerbits, YOLOv5 was state-of-the-art. Now, we're living in a world where RT-DETR (Real-Time Detection Transformer) delivers transformer-level accuracy at YOLO-level speeds, where YOLOv11 includes native segmentation support, and where models like SAM3 can segment virtually anything with minimal prompting.

I've integrated support for multiple model architectures:

- RT-DETR - Transformer-based detection that excels at small objects and challenging aerial perspectives

- YOLOv8, v9, v11 - The evolution of YOLO continues to impress with speed and accuracy improvements

- YOLOv11-seg - Instance segmentation that gives us pixel-level boundaries around detected objects

- SAM3 integration - For advanced segmentation workflows including box-based, semantic, and dataset-wide segmentation

Each model brings different strengths. RT-DETR shines when detecting small items from high altitudes. YOLO variants are blazingly fast for real-time processing. Segmentation models give us the precision needed for volume calculations and waste characterization.
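To make those trade-offs concrete, here is a minimal sketch of rule-based model selection. The `TaskRequirements` fields, the precedence of the rules, and the returned architecture names are illustrative assumptions, not the actual Aerbits configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskRequirements:
    # Hypothetical task descriptor; these fields are illustrative assumptions.
    needs_masks: bool = False    # area/volume work needs pixel boundaries
    realtime: bool = False       # live field operations
    high_altitude: bool = False  # small objects relative to the frame


def choose_architecture(req: TaskRequirements) -> str:
    """Map task requirements to a model family (hypothetical identifiers)."""
    if req.needs_masks:
        return "yolov11-seg"  # instance masks for area and volume estimation
    if req.high_altitude or not req.realtime:
        return "rt-detr"      # small objects, or accuracy matters more than latency
    return "yolov11"          # real-time work without small-object pressure


print(choose_architecture(TaskRequirements(high_altitude=True)))  # rt-detr
```

When a task is both high-altitude and real-time, this sketch favors RT-DETR, since it targets real-time speeds anyway; that precedence is a judgment call rather than a rule.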

From Detection to Understanding

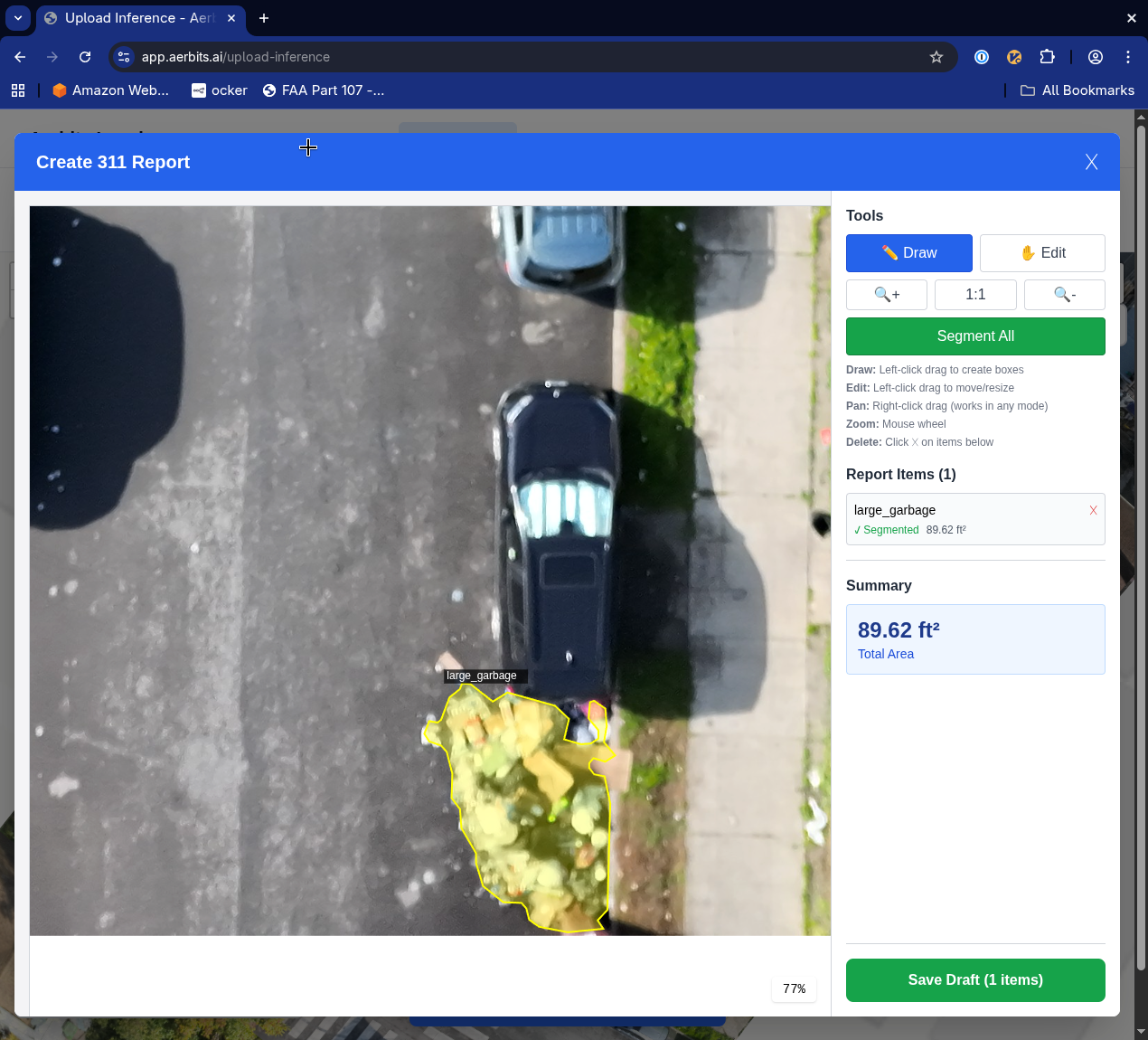

One of the most significant advances is the move from simple bounding boxes to full instance segmentation. When you can segment the exact boundary of a pile of waste, you can:

- Calculate the area covered with precision

- Estimate volume using depth cues from overlapping imagery

- Distinguish between multiple items in close proximity

- Track changes over time with pixel-level accuracy

- Generate better training data for continuous model improvement
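Area from a segmentation mask comes down to pixel count times the ground footprint of one pixel, the ground sampling distance (GSD). A minimal sketch, assuming a nadir (straight-down) camera over flat ground; the camera parameters in the example are illustrative, not a specific Aerbits drone.

```python
def ground_sampling_distance(sensor_width_mm: float, focal_length_mm: float,
                             image_width_px: int, altitude_m: float) -> float:
    """Metres of ground per pixel: (sensor width * altitude) / (focal length * image width).

    Assumes a nadir camera over flat terrain; the millimetres cancel, leaving metres per pixel.
    """
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def mask_area_m2(mask: list[list[bool]], gsd_m: float) -> float:
    """Area of a binary mask: number of set pixels times the ground area of one pixel."""
    pixels = sum(1 for row in mask for value in row if value)
    return pixels * gsd_m ** 2


# Illustrative camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px wide image, flown at 30 m.
gsd = ground_sampling_distance(13.2, 8.8, 5472, 30.0)
print(f"{gsd * 100:.2f} cm/pixel")  # 0.82 cm/pixel
```

At that GSD, a mask of 10,000 pixels covers about 0.68 m². Sloped terrain or an oblique camera angle would break the flat-ground assumption.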

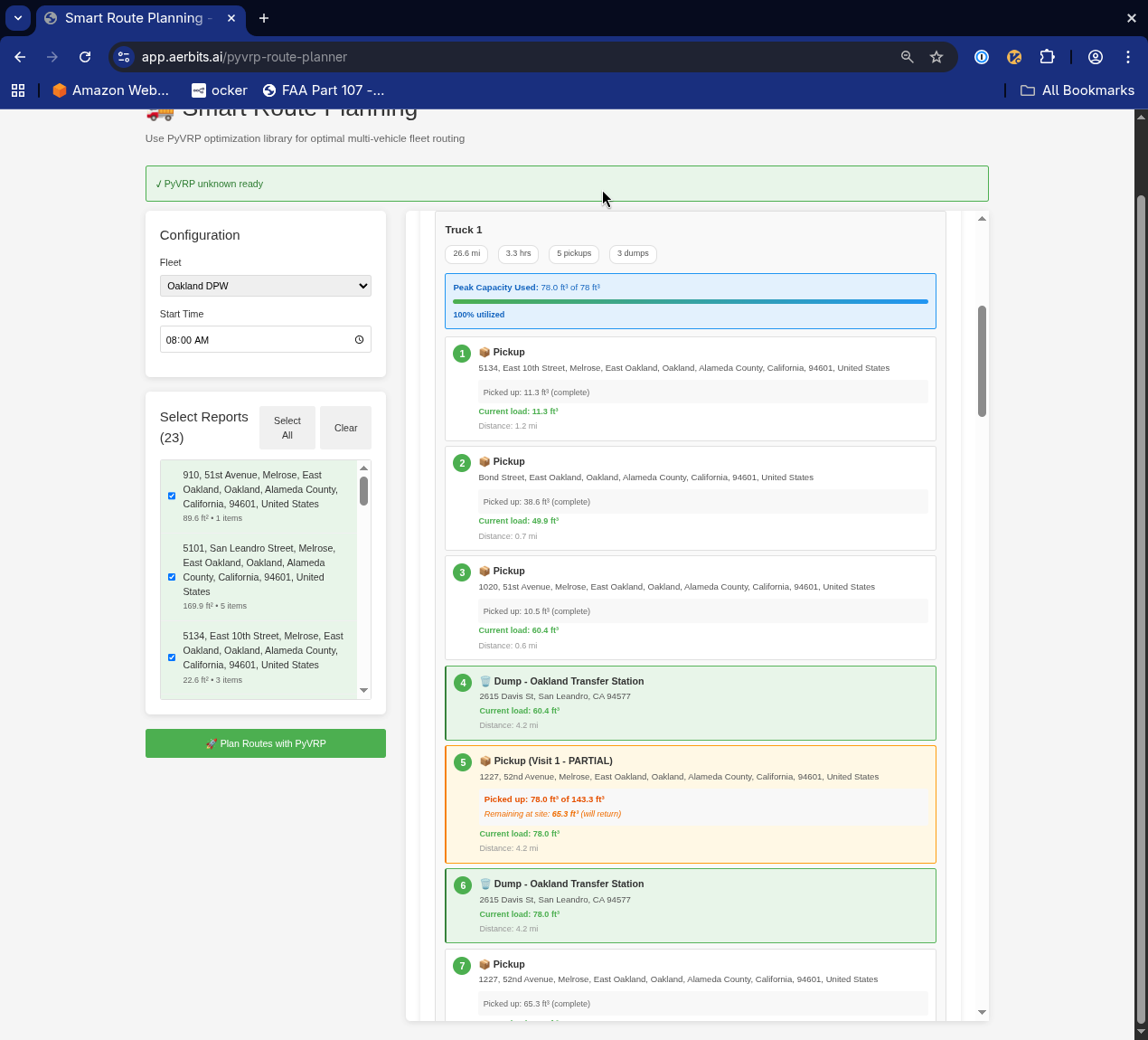

Instance segmentation provides the detailed information needed for volume estimation. By understanding the precise shape and area of detected waste, the system can estimate cubic footage and determine what type of vehicle and crew size is needed for pickup.

This level of detail transforms how field crews plan and execute cleanup operations. Instead of "there's trash at this location," we can now say "there's approximately 15 cubic feet of bulky items at this location, requiring a pickup truck and two crew members."
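Turning a footprint into a pickup plan also needs a height estimate. The sketch below treats a pile as a prism (footprint area times mean height) and assumes the height comes from elsewhere, for example photogrammetry on overlapping images. The dispatch tiers are hypothetical, chosen so that roughly 15 cubic feet maps to a pickup truck and two crew members, matching the example above.

```python
M3_TO_FT3 = 35.3147  # cubic feet per cubic metre

# Hypothetical dispatch tiers: (maximum volume in ft^3, vehicle, crew size).
DISPATCH_TIERS = [
    (5.0, "hand cart", 1),
    (40.0, "pickup truck", 2),
    (200.0, "flatbed truck", 3),
]


def estimate_volume_ft3(area_m2: float, mean_height_m: float) -> float:
    """Prism approximation: footprint area times an assumed mean pile height."""
    return area_m2 * mean_height_m * M3_TO_FT3


def recommend_dispatch(volume_ft3: float) -> tuple[str, int]:
    """Return the smallest tier whose capacity covers the estimated volume."""
    for max_volume, vehicle, crew in DISPATCH_TIERS:
        if volume_ft3 <= max_volume:
            return vehicle, crew
    return "multiple flatbed trips", 3  # larger than any single tier


# A 1.4 m^2 footprint at 0.3 m mean height is about 14.8 ft^3.
print(recommend_dispatch(estimate_volume_ft3(1.4, 0.3)))  # ('pickup truck', 2)
```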

The Dataset Refinement Project

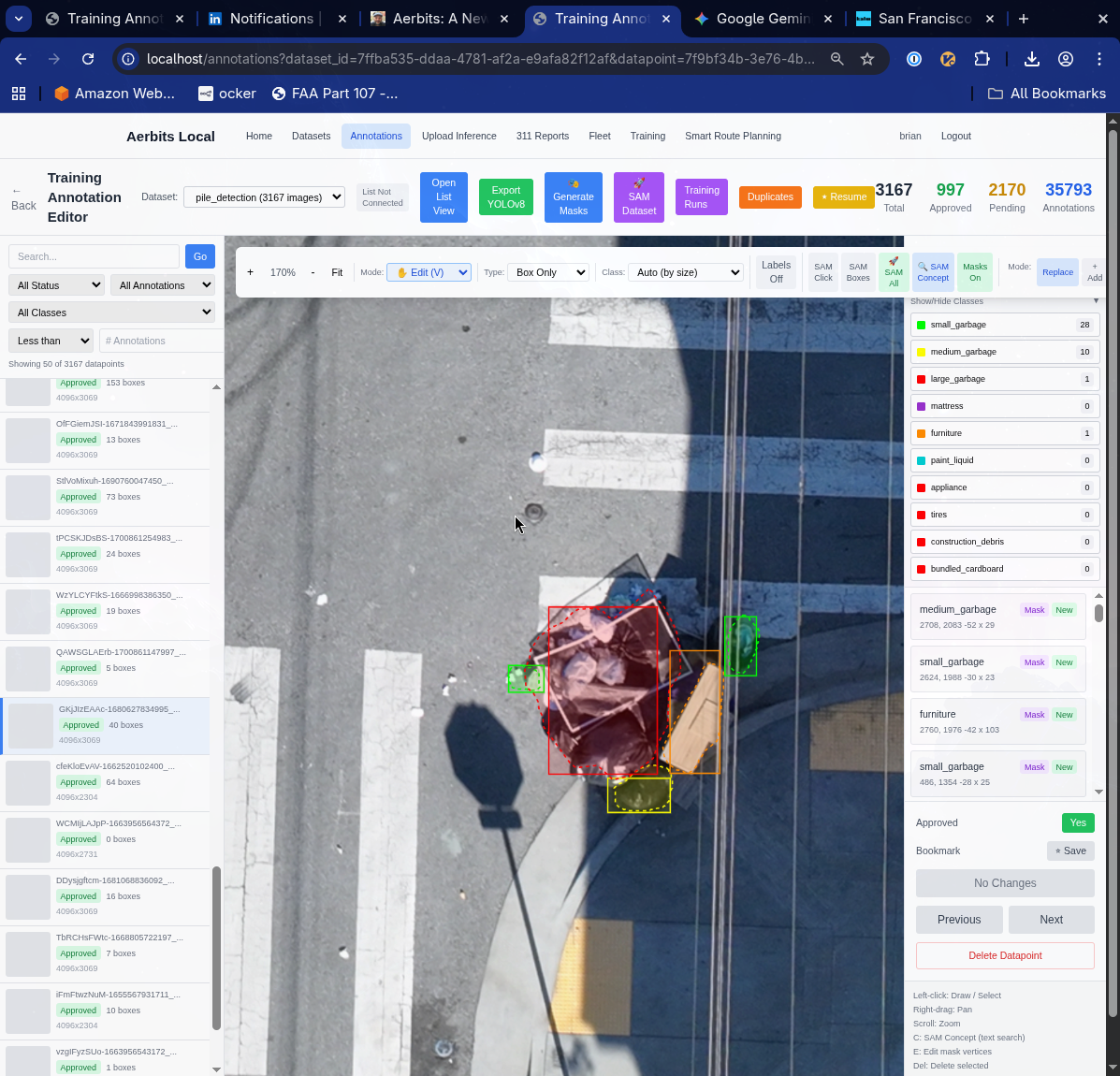

Perhaps the most important work happening right now is something that doesn't involve any new technology at all: I'm meticulously hand-correcting my entire historical dataset, applying three years of learned intuition about what matters for operational waste detection.

When I started, I didn't fully understand the nuances of what makes a good detection. I've now flown hundreds of hours, reviewed tens of thousands of images, and worked with crews who use these detections to plan their daily routes. That experience has taught me:

- Which types of items are actually actionable for cleanup crews

- How to distinguish between temporary and persistent dumping

- What level of detail is useful versus distracting

- How to handle edge cases like construction materials versus illegal dumping

- When context matters more than individual detections

This dataset refinement is tedious, manual work. But it's the foundation that everything else builds on. A model is only as good as its training data, and I'm now creating what may be the most comprehensive, thoughtfully annotated aerial waste detection dataset in existence.

Multi-Class, Multi-Dataset Architecture

The new Aerbits platform supports multiple detection classes and multiple datasets simultaneously. This is crucial for scaling beyond just waste detection:

- Waste classes: general trash, bulky items, hazardous materials, vegetation overgrowth, graffiti

- Infrastructure classes: potholes, damaged signage, street furniture needing maintenance

- Negative classes: areas to exclude from detection (more on this below)

Different projects can use different combinations of classes. A downtown cleanup operation might focus on trash and graffiti. A public works inspection might prioritize potholes and damaged infrastructure. The system flexibly handles whatever the task requires.
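One way to model this is a per-project configuration listing the datasets and classes in scope, with detections filtered against it. The project names, dataset names, and class identifiers here are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProjectConfig:
    name: str
    datasets: tuple[str, ...]  # training/evaluation datasets (hypothetical names)
    classes: frozenset[str]    # detection classes that matter for this project


PROJECTS = {
    "downtown_cleanup": ProjectConfig(
        "downtown_cleanup", ("downtown_flights",), frozenset({"general_trash", "graffiti"})
    ),
    "public_works_inspection": ProjectConfig(
        "public_works_inspection", ("arterial_flights",), frozenset({"pothole", "damaged_signage"})
    ),
}


def filter_for_project(detections: list[dict], project: ProjectConfig) -> list[dict]:
    """Keep only detections whose class is in scope for the project."""
    return [d for d in detections if d["class"] in project.classes]
```

The same model output can then serve both projects; only the filter changes.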

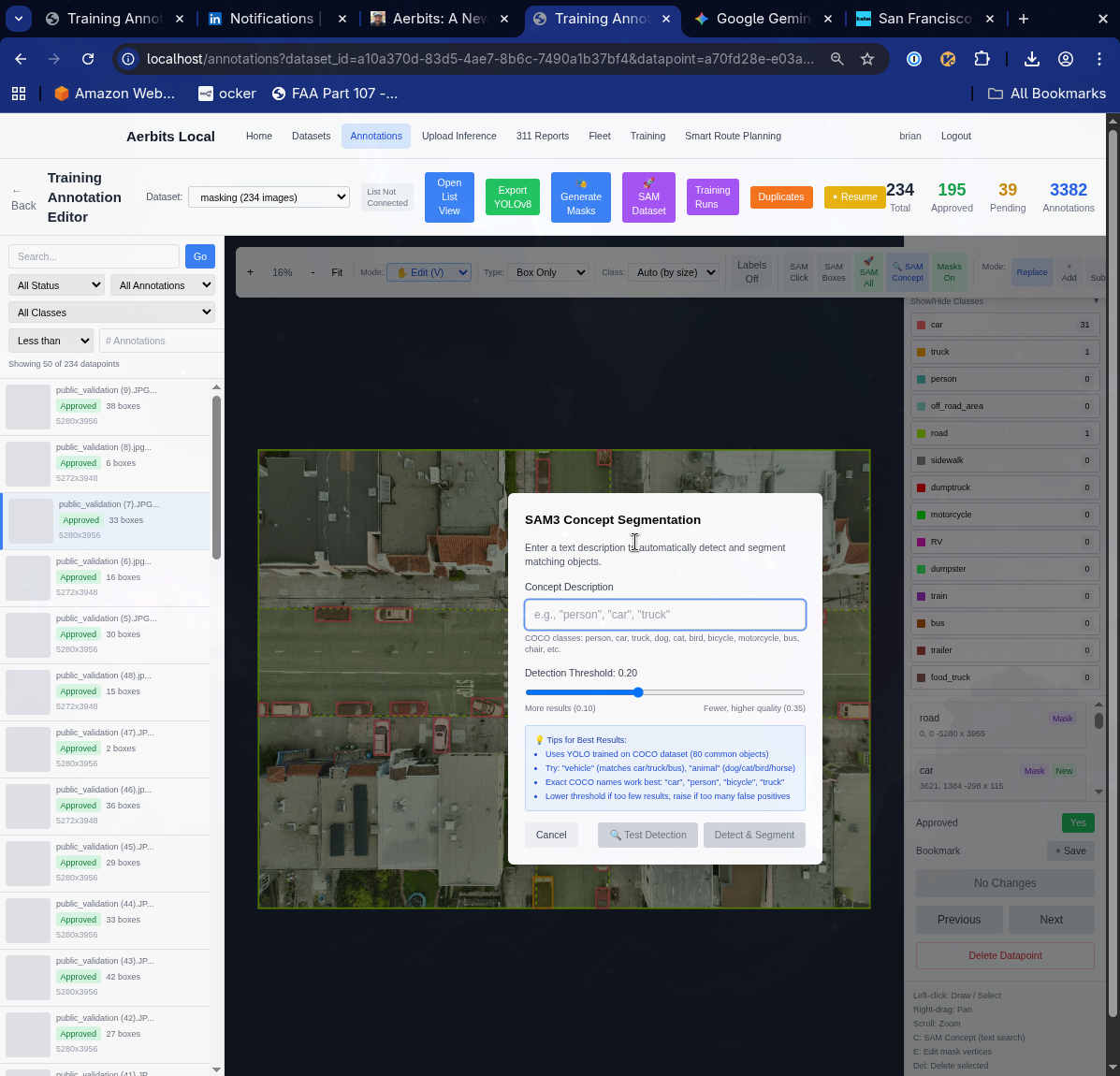

Intelligent Masking: What NOT to Detect

One of the more subtle but important innovations is the introduction of masking datasets. Not everything detected by the models should trigger a report. A pile of leaves in someone's private yard isn't actionable. Construction materials on an active job site aren't illegal dumping. Items on private property are outside the jurisdiction of public works crews.

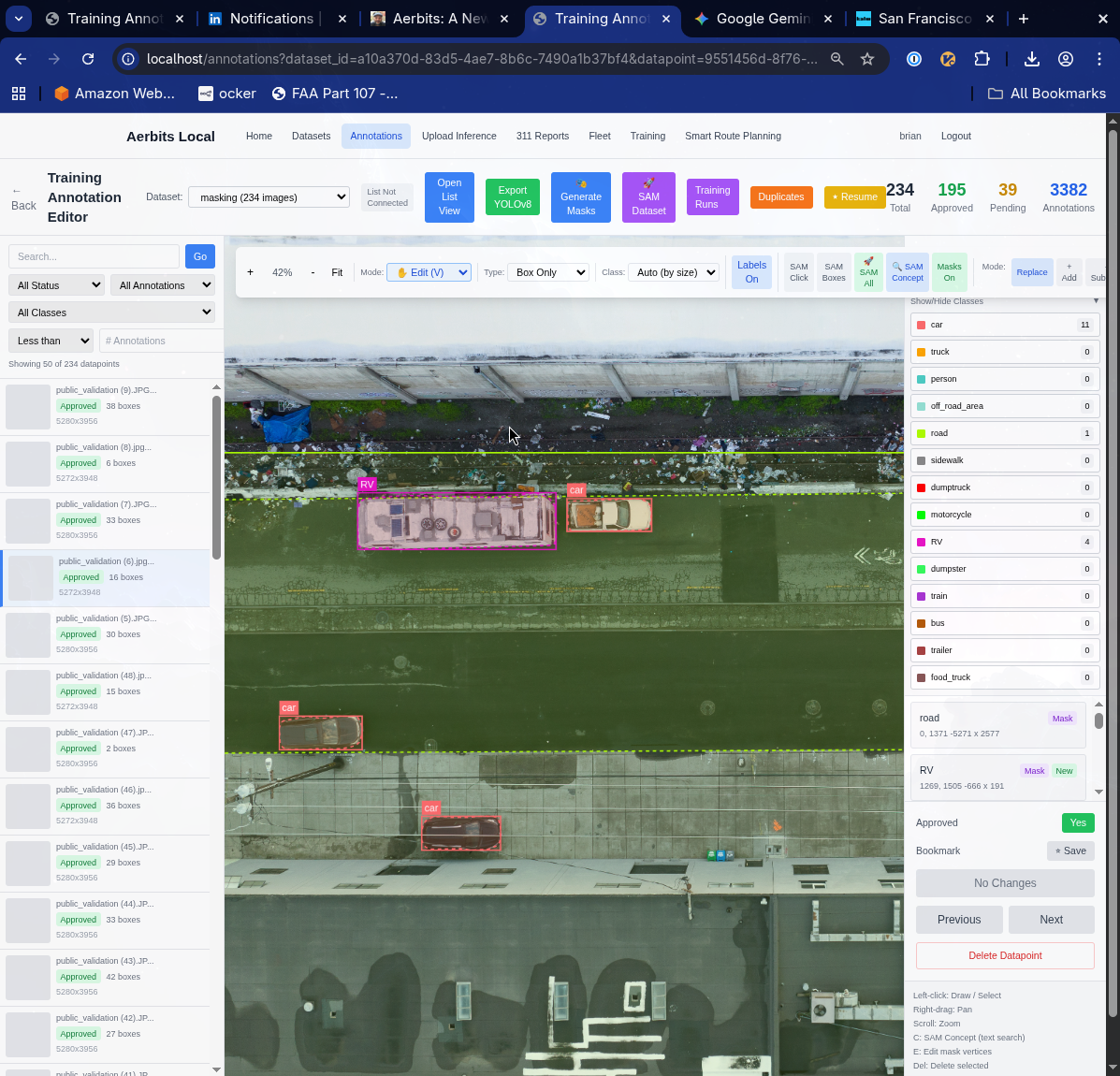

I've started building a masking dataset that segments everything except roads and sidewalks. SAM3's semantic segmentation capabilities make this process dramatically faster—I can segment entire categories of areas (buildings, yards, parking lots) with a few prompts rather than manually drawing thousands of polygons.

This allows the system to:

- Automatically filter out detections on private property

- Focus crew attention on public right-of-way issues

- Reduce false positives from areas outside city jurisdiction

- Respect privacy concerns while maximizing operational efficiency

This "negative space" approach—defining what to ignore rather than just what to detect—has proven remarkably effective. It's a pattern I suspect will become common in operational computer vision systems.
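Applying the mask can be as simple as measuring how much of each detection's box falls inside the excluded region. A minimal sketch, assuming the masking dataset yields a per-pixel boolean grid where `True` means "not road or sidewalk", and assuming a 50% cutoff, which is an illustrative choice rather than a tuned value.

```python
def excluded_fraction(exclusion_mask: list[list[bool]], box: tuple[int, int, int, int]) -> float:
    """Share of a box's pixels that fall in the exclusion mask.

    `box` is (x1, y1, x2, y2) in pixel coordinates, half-open: columns x1..x2-1, rows y1..y2-1.
    """
    x1, y1, x2, y2 = box
    total = (x2 - x1) * (y2 - y1)
    if total <= 0:
        return 1.0  # degenerate box: treat as fully excluded so it gets dropped
    excluded = sum(exclusion_mask[y][x] for y in range(y1, y2) for x in range(x1, x2))
    return excluded / total


def keep_actionable(detections: list[dict], exclusion_mask, max_excluded: float = 0.5) -> list[dict]:
    """Drop detections that sit mostly on private or otherwise excluded ground."""
    return [d for d in detections if excluded_fraction(exclusion_mask, d["box"]) < max_excluded]
```

Using the fraction of overlap rather than a single center point keeps items that straddle a property line and a sidewalk, as long as most of the box is on the public side.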

Tooling for Continuous Improvement

Modern computer vision isn't a "train once and deploy" proposition. It's a continuous cycle of deployment, feedback, annotation, and retraining. The new Aerbits platform includes substantially improved tooling for this cycle:

- Enhanced labeling UI - faster annotation with keyboard shortcuts, batch operations, and smart suggestions

- SAM3-powered semi-automation - click a point or draw a box, let SAM3 do the precise segmentation

- Semantic segmentation workflows - segment entire image regions with single clicks

- Dataset-wide segmentation - apply consistent segmentation rules across thousands of images

- Quality assurance tools - flag uncertain detections for human review

- Version control for datasets - track changes, compare model performance across dataset versions

These tools dramatically accelerate the improvement cycle. What used to take hours of tedious polygon drawing can now be accomplished in minutes with SAM3-assisted annotation.
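The quality-assurance step of flagging uncertain detections can be sketched as a three-way split on model confidence. The two thresholds are hypothetical; in practice they would be tuned per class against reviewed data.

```python
def triage(detections: list[dict], accept_at: float = 0.80, discard_below: float = 0.30):
    """Split detections into auto-accepted and needs-human-review; low scores are dropped.

    Thresholds are illustrative assumptions, not tuned values.
    """
    accepted, review = [], []
    for d in detections:
        if d["score"] >= accept_at:
            accepted.append(d)
        elif d["score"] >= discard_below:
            review.append(d)  # uncertain: route to the labeling UI for a human decision
        # scores below discard_below are treated as noise and not surfaced
    return accepted, review
```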

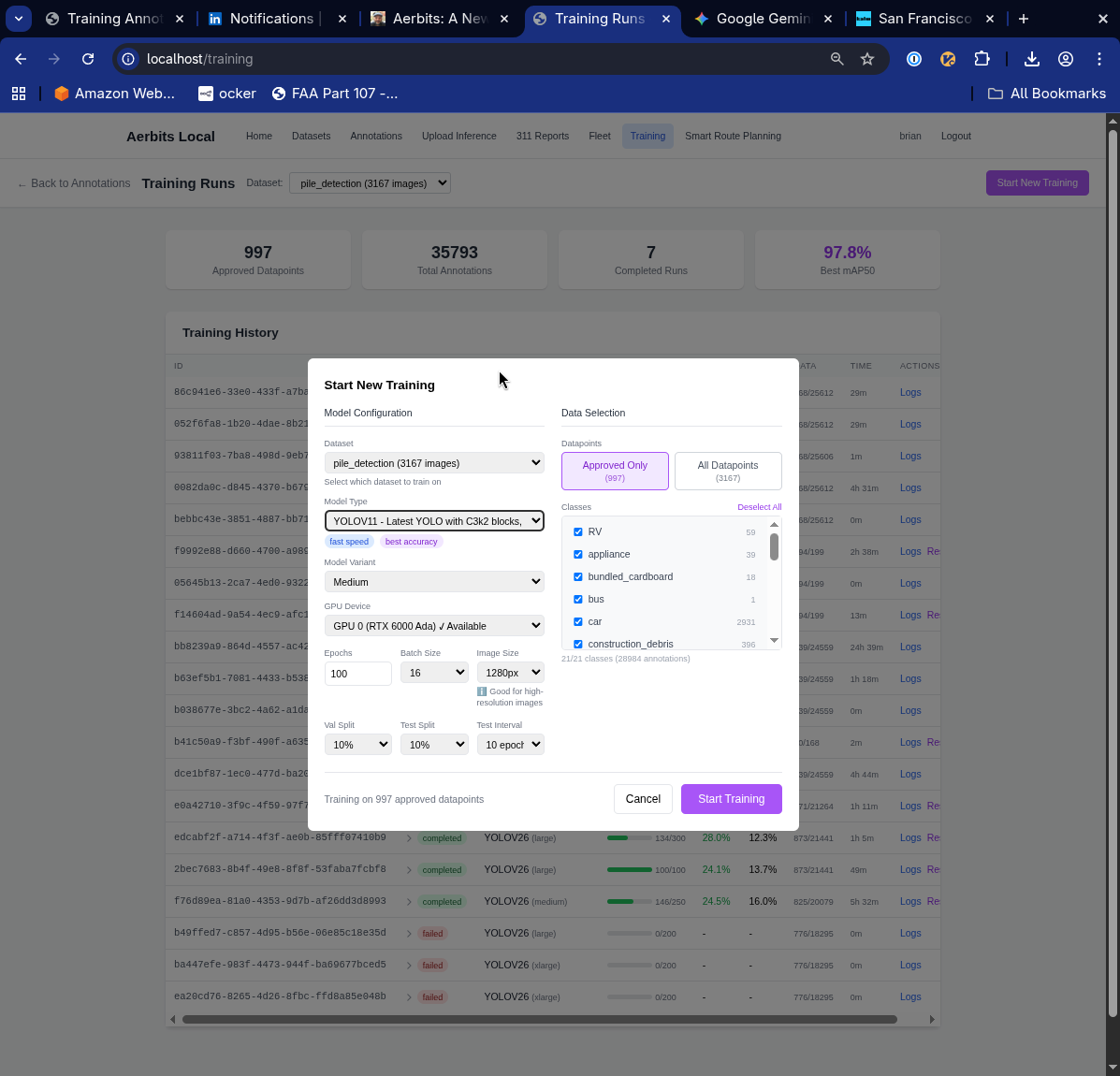

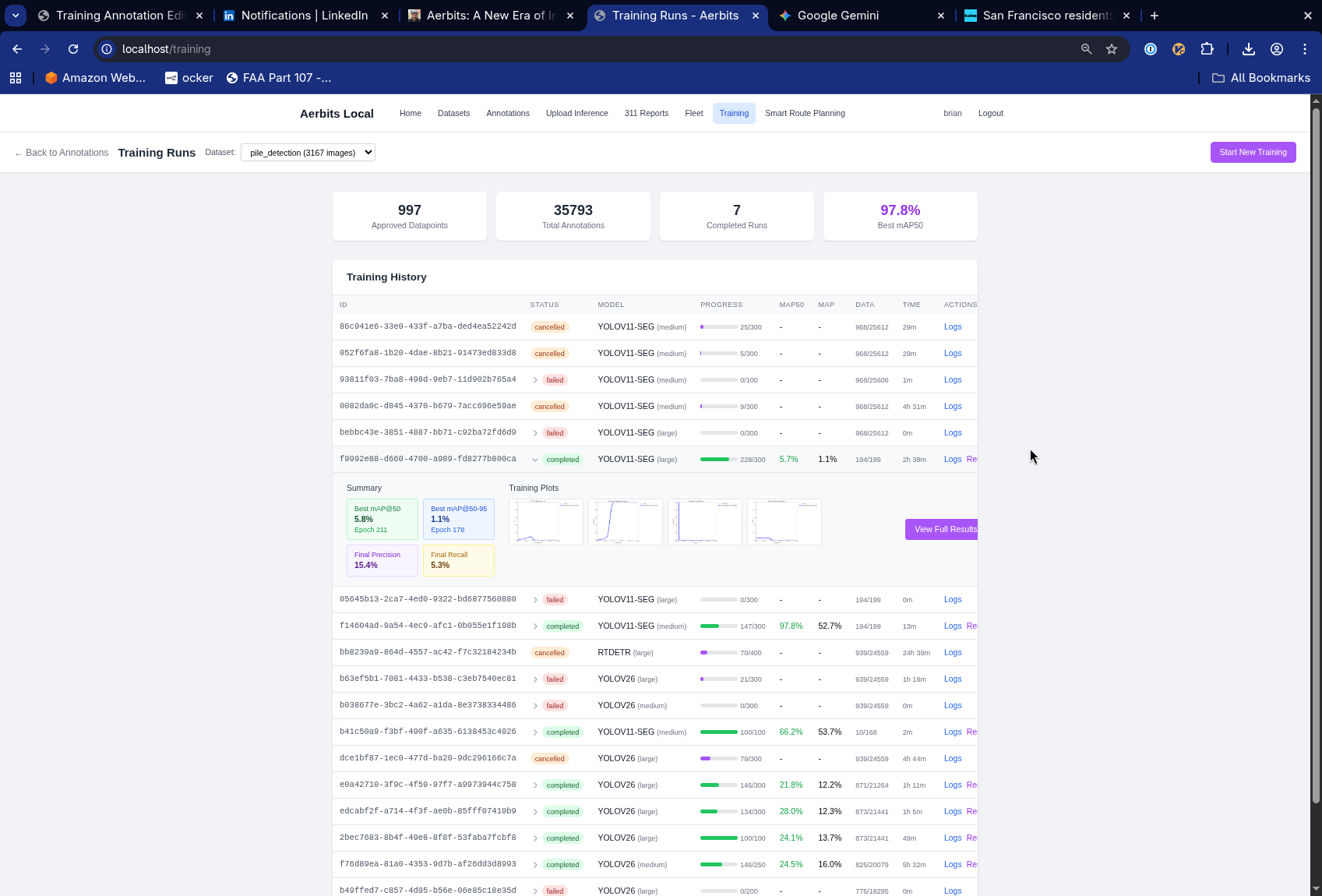

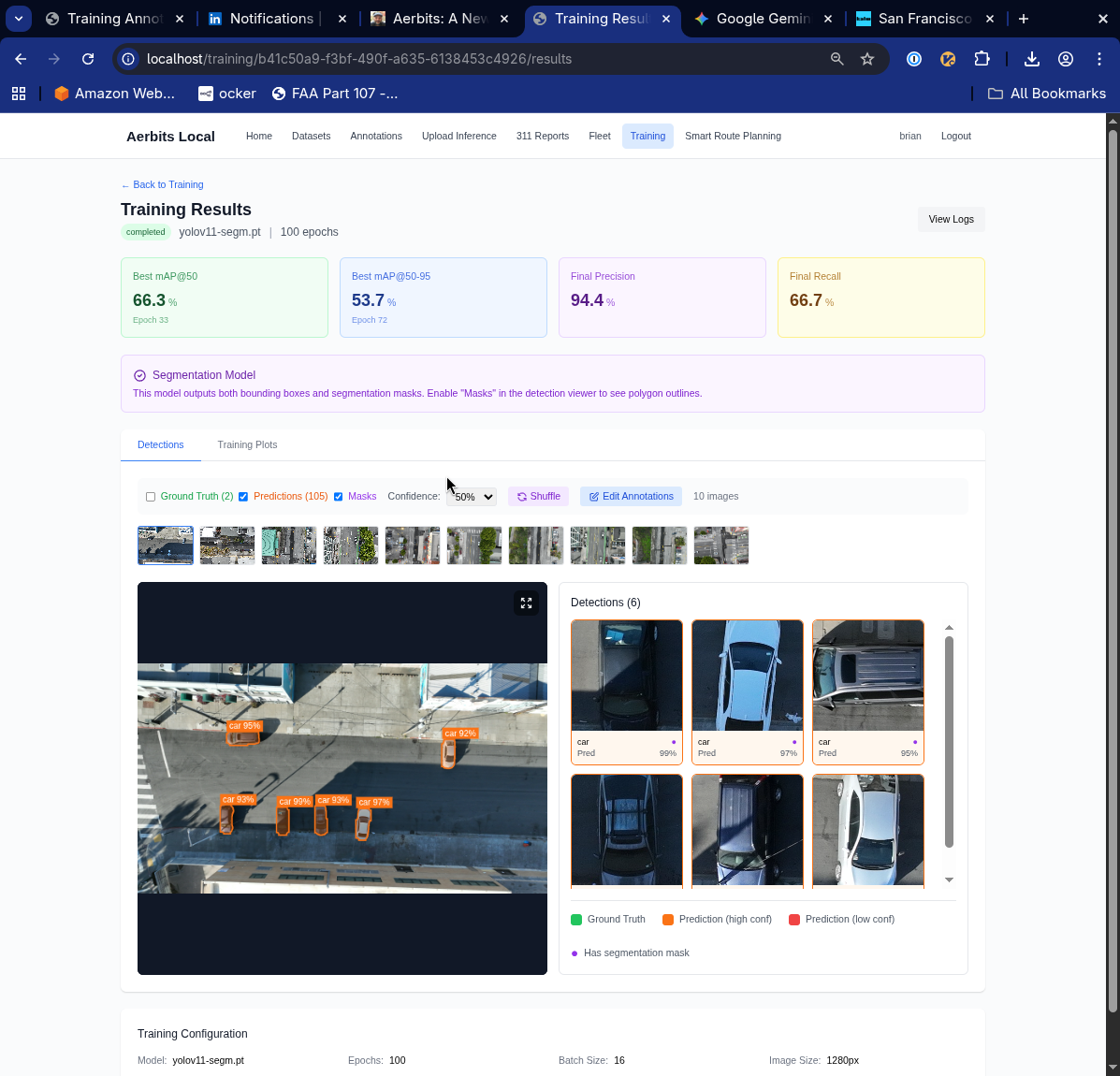

Once datasets are refined and annotated, the platform provides streamlined workflows for launching new training runs. You can select from multiple model architectures (RT-DETR, YOLO variants, segmentation models), choose which datasets to train on, configure hyperparameters, and track training progress—all from a unified interface.

The platform tracks all training runs in a centralized dashboard, making it easy to compare performance across different model architectures, datasets, and hyperparameter configurations. Each training run records metrics, timestamps, and configuration details for full reproducibility.
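Reproducibility mostly comes down to recording everything that determines a run. A minimal sketch of such a record, assuming a simple in-process data model with hypothetical field names: hashing the architecture, dataset list, and hyperparameters gives a stable ID, so two runs with identical configurations can be recognized even when their metrics or timestamps differ. Hyperparameters are assumed to be JSON-serializable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class TrainingRun:
    architecture: str       # e.g. "rt-detr" (hypothetical identifier)
    datasets: list[str]     # dataset versions used for training
    hyperparameters: dict   # anything that affects the trained weights
    metrics: dict = field(default_factory=dict)
    started_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def config_id(self) -> str:
        """Stable short hash of the configuration; metrics and timestamps are excluded."""
        payload = json.dumps(
            {
                "architecture": self.architecture,
                "datasets": sorted(self.datasets),
                "hyperparameters": self.hyperparameters,
            },
            sort_keys=True,  # key order must not change the hash
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```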

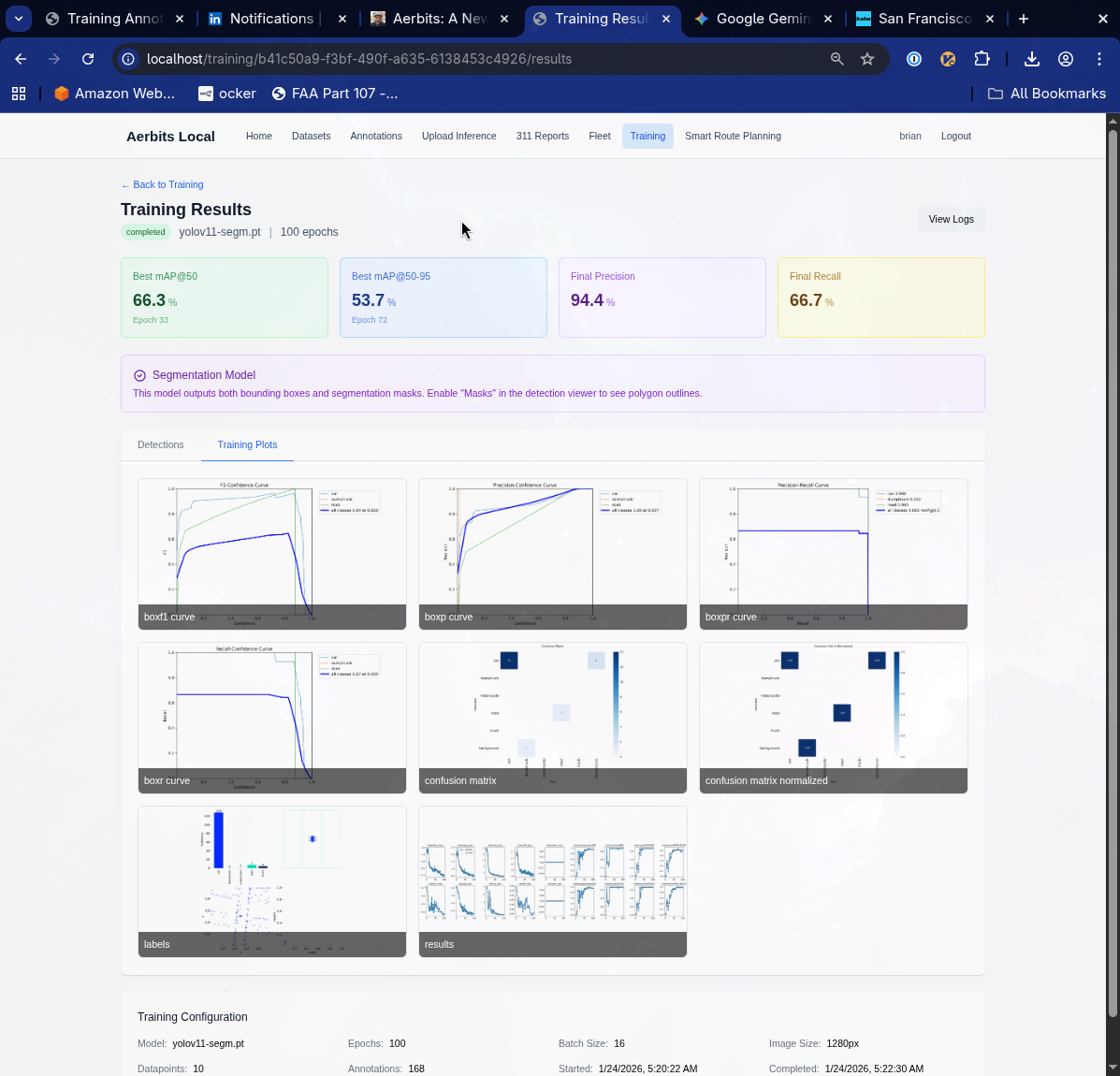

For each completed training run, detailed results are available including precision, recall, mAP scores, training curves, confusion matrices, and per-class performance metrics. This visibility makes it clear whether a new model represents genuine improvement or if the training needs adjustment.
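For readers who want to see what sits behind the mAP number, here is a minimal single-class, single-image sketch: predictions are matched greedily to ground-truth boxes in score order at an IoU threshold, precision and recall are accumulated down the ranked list, and AP is the area under the interpolated precision-recall curve. It uses all-point interpolation; COCO-style evaluation interpolates at 101 recall points and averages over several IoU thresholds, so numbers from a real evaluator will differ somewhat. mAP is then the mean of AP over classes.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gt_boxes, iou_thresh=0.5):
    """AP for one class: greedy score-ordered matching, then all-point interpolation."""
    if not gt_boxes:
        return 0.0
    matched = set()
    tp = fp = 0
    recalls, precisions = [], []
    for p in sorted(preds, key=lambda p: p["score"], reverse=True):
        # Best still-unmatched ground-truth box at or above the IoU threshold.
        best, best_iou = None, iou_thresh
        for i, g in enumerate(gt_boxes):
            overlap = iou(p["box"], g)
            if i not in matched and overlap >= best_iou:
                best, best_iou = i, overlap
        if best is None:
            fp += 1
        else:
            matched.add(best)
            tp += 1
        recalls.append(tp / len(gt_boxes))
        precisions.append(tp / (tp + fp))
    # Interpolated precision at each point is the max precision at that point or later;
    # AP sums it over every step in recall.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap
```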

Beyond numerical metrics, the platform provides visual inference results on the evaluation set. These visualizations show exactly what the model is detecting, where it's making mistakes, and how confident it is in each prediction. Seeing the model's outputs on real images is crucial for understanding whether improved metrics translate to better operational performance.

This end-to-end workflow—from data collection to annotation to training to deployment—enables rapid iteration. A new training run can be launched in minutes, evaluated against previous model versions, and deployed to production if it shows improvement. The speed of this cycle directly translates to better detection accuracy in the field.
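The "deploy if it shows improvement" decision can be written down as an explicit gate. A minimal sketch, assuming both models were evaluated on the same held-out set; the margins are hypothetical. Checking per-class AP as well as overall mAP guards against a model that raises the average while getting worse on a class crews care about, such as hazardous materials.

```python
def should_promote(candidate: dict, production: dict,
                   min_gain: float = 0.01, max_class_drop: float = 0.02) -> bool:
    """Promote only if overall mAP improves by at least min_gain and no class regresses
    by more than max_class_drop. Both dicts hold "mAP" and "per_class_ap"."""
    if candidate["mAP"] < production["mAP"] + min_gain:
        return False
    for cls, prod_ap in production["per_class_ap"].items():
        # A class missing from the candidate's results counts as AP 0.
        if candidate["per_class_ap"].get(cls, 0.0) < prod_ap - max_class_drop:
            return False
    return True
```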

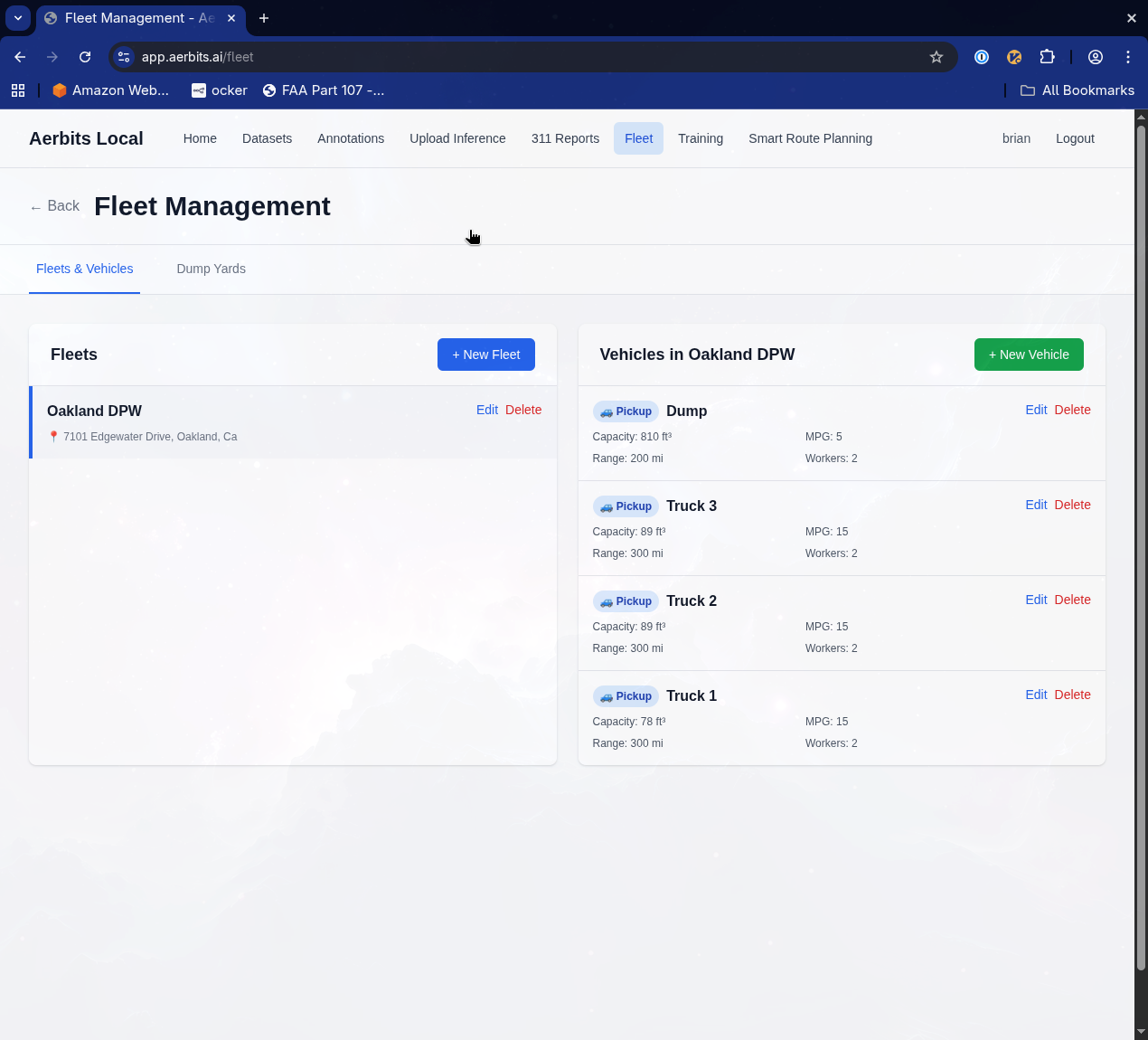

Fleet Management and Capacity Planning

Managing cleanup operations requires more than just knowing where the waste is—you need to understand your resources and match them to the work. The new Aerbits platform includes comprehensive fleet management capabilities that help coordinate multiple vehicles, track capacity, and ensure the right equipment is deployed to the right locations.

The fleet management system allows you to:

- Configure vehicle types with specific capacities and capabilities

- Assign vehicles to depots and define their operational areas

- Track vehicle availability and scheduling constraints

- Match waste types to appropriate vehicle equipment (compactors for loose trash, flatbeds for bulky items)

- Calculate total capacity needs based on detected waste volumes

This foundation enables intelligent route planning that accounts for real-world operational constraints—not just theoretical optimal paths, but routes that work with your actual fleet.
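As a sketch of the capacity calculation, assume each vehicle type has a single volume capacity and a set of waste types its equipment can handle (the types and capacities below are hypothetical). Total detected volume per vehicle type then converts directly into the number of loads needed.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleType:
    name: str
    capacity_ft3: float        # usable volume per load (hypothetical values below)
    handles: frozenset[str]    # waste types this equipment can take


FLEET = [
    VehicleType("compactor", 600.0, frozenset({"general_trash"})),
    VehicleType("flatbed", 400.0, frozenset({"bulky_items"})),
]


def loads_required(detections: list[dict], fleet: list[VehicleType]) -> dict[str, int]:
    """Sum detected volume per vehicle type and convert it to whole loads."""
    volume = {v.name: 0.0 for v in fleet}
    for d in detections:
        vehicle = next((v for v in fleet if d["type"] in v.handles), None)
        if vehicle is None:
            raise ValueError(f"no vehicle type handles {d['type']!r}")
        volume[vehicle.name] += d["volume_ft3"]
    return {v.name: math.ceil(volume[v.name] / v.capacity_ft3) for v in fleet}
```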

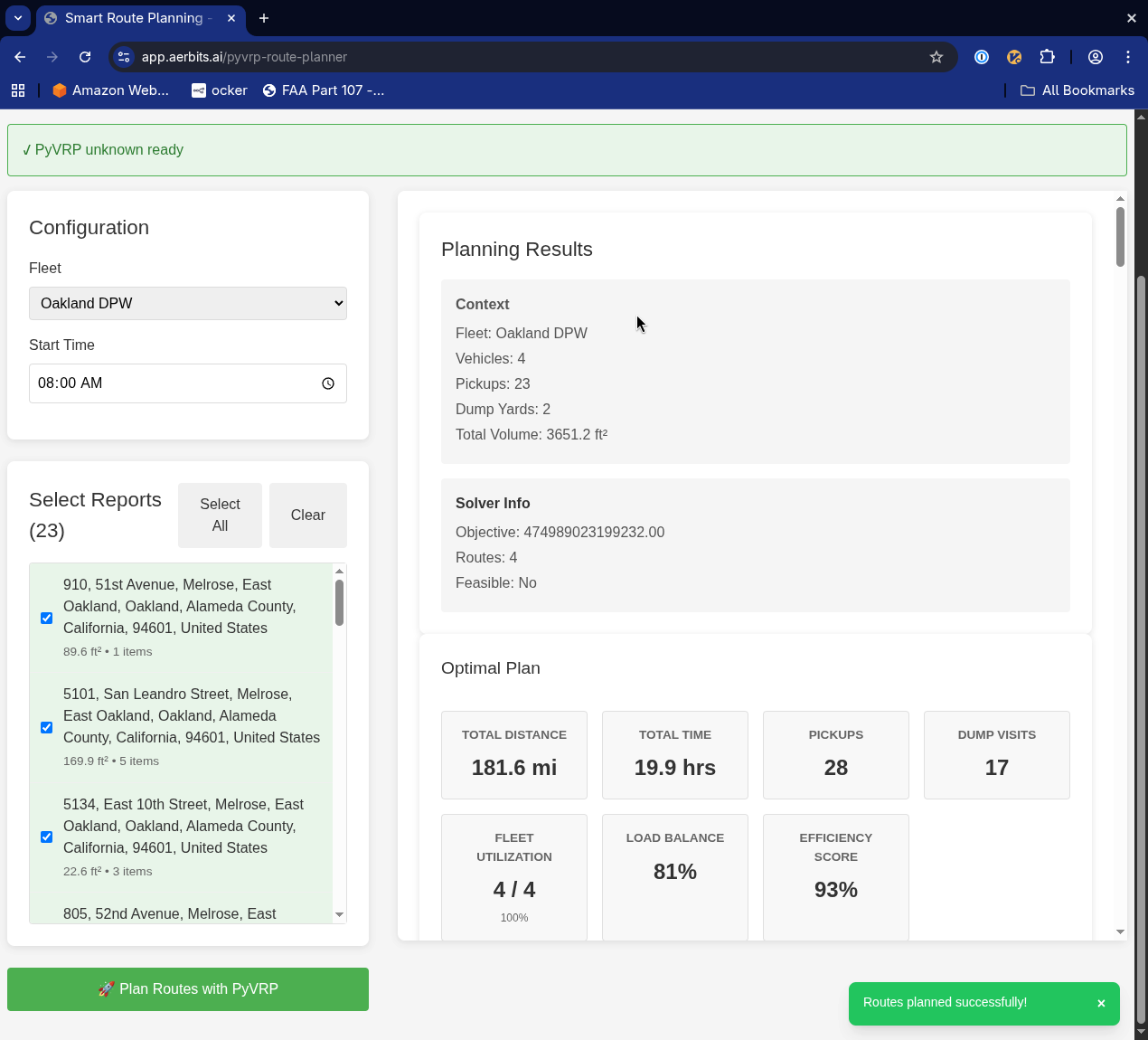

Route Optimization with PyVRP

With detections mapped and fleet configured, the platform uses PyVRP, an open-source Python package for solving vehicle routing problems, to generate high-quality, near-optimal pickup routes that maximize crew efficiency.

Given a set of detected waste locations, the system can:

- Generate near-optimal pickup routes considering vehicle capacity, crew size, and time windows

- Balance workload across multiple vehicles

- Prioritize based on waste volume, type, or community complaints

- Account for real-world constraints like one-way streets, traffic patterns, and dump site hours

- Continuously reoptimize as new detections arrive throughout the day

The route planner generates detailed plans showing total distance, time, pickup counts, and efficiency scores. It optimizes for both individual vehicle routes and overall fleet utilization.

This closes the loop from aerial detection to field execution. A crew starts their day with an optimized route showing exactly where to go, what to expect, and how to sequence their pickups for maximum efficiency.
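PyVRP runs a metaheuristic search for high-quality routes, and its own API is the right tool in practice. To show the shape of the problem it solves (a depot, stops with volumes, and vehicles with capacity limits), here is a deliberately simple stand-in: a greedy nearest-neighbour construction heuristic in plain Python. It is not PyVRP and will usually produce longer routes; straight-line distance also stands in for the road-network travel costs that would encode one-way streets and traffic.

```python
import math


def route_greedy(depot, stops, capacity, num_vehicles):
    """Nearest-neighbour construction (a simple stand-in, not PyVRP's algorithm).

    depot: (x, y); stops: {stop_id: ((x, y), volume)}. Each vehicle leaves the depot,
    repeatedly visits the closest unvisited stop that still fits, then returns.
    """
    remaining = dict(stops)
    routes = []
    for _ in range(num_vehicles):
        pos, load, route, dist = depot, 0.0, [], 0.0
        while True:
            fits = [(sid, xy, vol) for sid, (xy, vol) in remaining.items() if load + vol <= capacity]
            if not fits:
                break
            sid, xy, vol = min(fits, key=lambda s: math.dist(pos, s[1]))
            dist += math.dist(pos, xy)
            pos, load = xy, load + vol
            route.append(sid)
            del remaining[sid]
        dist += math.dist(pos, depot)  # return leg to the depot
        routes.append({"stops": route, "distance": dist, "load": load})
    return routes, sorted(remaining)  # unserved stops would roll over to another shift


routes, unserved = route_greedy(
    (0, 0), {"a": ((1, 0), 10), "b": ((2, 0), 10), "c": ((0, 5), 10)}, capacity=20, num_vehicles=2
)
print([r["stops"] for r in routes], unserved)  # [['a', 'b'], ['c']] []
```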

Vision-Language Models: The Next Frontier

The most exciting frontier is the integration of vision-language models (VLMs). Models like GPT-4V and specialized VLMs can understand images in ways that go beyond simple object detection:

- "Is this an active construction site or illegal dumping?"

- "What type of vehicle would be needed to remove this item?"

- "Are there any safety hazards visible in this area?"

- "Has this pile grown since the last flight?"

While VLMs aren't yet fast enough for primary detection (RT-DETR and YOLO handle that), they're incredibly powerful for secondary analysis, quality control, and providing context that helps crews plan more effectively.

I'm experimenting with VLM-powered workflows for automated report generation, anomaly detection, and even drafting 311 submissions with detailed descriptions that a human can quickly review and approve.
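A minimal sketch of that review-first pattern, assuming the VLM is asked to answer in JSON. The question text, labels, and field names are hypothetical, and the actual model call is left out because it depends on the provider. Anything the parser cannot validate falls back to "unsure", and drafted 311 reports stay in a pending state until a human approves them.

```python
import json

# Hypothetical question sent alongside an image crop of the detection.
QUESTION = (
    "Is this an active construction site or illegal dumping? "
    'Reply with JSON: {"label": "construction" | "dumping" | "unsure", "description": "<one sentence>"}'
)
LABELS = {"construction", "dumping", "unsure"}


def parse_vlm_reply(text: str) -> dict:
    """Parse the model's JSON reply; anything malformed becomes 'unsure' for human review."""
    try:
        reply = json.loads(text)
    except json.JSONDecodeError:
        return {"label": "unsure", "description": ""}
    if not isinstance(reply, dict) or reply.get("label") not in LABELS:
        return {"label": "unsure", "description": ""}
    return {"label": reply["label"], "description": str(reply.get("description", ""))}


def draft_311(detection: dict, reply: dict):
    """Draft a 311 report only for dumping; nothing is submitted until a reviewer approves it."""
    if reply["label"] != "dumping":
        return None
    return {
        "location": detection["location"],
        "description": reply["description"],
        "status": "pending_review",
    }
```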

What This Means for Cities

The technical improvements I've described translate directly into operational capabilities that didn't exist before:

- Proactive cleanup - Find and address issues before residents complain

- Data-driven resource allocation - Deploy crews where they're needed most

- Objective performance metrics - Track cleanup effectiveness with before/after aerial imagery

- Predictive maintenance - Identify areas prone to repeat dumping and address root causes

- Cost savings - Optimize routes, reduce unnecessary truck rolls, prevent small problems from becoming expensive ones

- Community impact - Demonstrably cleaner streets lead to increased pride, reduced crime, and improved quality of life
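Identifying areas prone to repeat dumping can start with something as simple as binning detections into a coordinate grid and counting how many separate flights found something in each cell. The cell size and the three-flight threshold are hypothetical; a 0.001° cell is roughly 111 m north to south, and somewhat less east to west at San Francisco's latitude.

```python
import math
from collections import defaultdict


def cell_of(lat: float, lon: float, cell_deg: float = 0.001) -> tuple[int, int]:
    """Snap a coordinate to an integer grid cell of cell_deg degrees on each side."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def repeat_hotspots(detections: list[dict], min_flights: int = 3, cell_deg: float = 0.001) -> dict:
    """Cells where detections appeared on at least min_flights distinct flight dates."""
    dates = defaultdict(set)
    for d in detections:
        dates[cell_of(d["lat"], d["lon"], cell_deg)].add(d["flight_date"])
    return {cell: len(days) for cell, days in dates.items() if len(days) >= min_flights}
```

Counting distinct flight dates rather than raw detections keeps one large pile, detected many times on a single flight, from looking like a chronic problem.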

These aren't theoretical benefits. I've seen them in action in San Francisco, where consistent aerial monitoring and rapid response have transformed problem areas from dumping hotspots into clean, maintained spaces.

The Path Forward

The rebuild of Aerbits represents more than just technical improvements. It's a bet on what becomes possible when you combine three years of operational learning with the latest advances in computer vision and AI.

The original Aerbits proved the concept: yes, you can use drones and AI to detect waste at scale. The new Aerbits is about taking that proven concept and transforming it into a platform that gives field crews superhuman capabilities—perfect memory of every street, precise measurement of every pile, optimal routing for every shift, and intelligent context about every situation.

We're moving from "finding trash" to "understanding the urban environment." That's the new era of intelligence that modern AI makes possible.

And I'm just getting started.